AI's Electric Appetite

Source: Reuters

The Hidden Cost of Our AI Revolution

It's fascinating to reflect on how quickly AI has integrated into our daily lives, and ChatGPT is a prime example of that rapid embrace. While its capabilities astound and assist millions, the infrastructure powering such technology remains largely behind the scenes. The specialized computer chips that run it, though small in form, carry a colossal energy appetite. The thirst for advanced tech has accelerated our push into this digital age, but at what ecological price?

By 2027, AI servers are projected to demand a staggering 85 to 134 terawatt hours of electricity annually. That’s about what Argentina, the Netherlands and Sweden each use in a year, or about 0.5 percent of the world's current electricity use. For comparison, in 2022 the data centers behind all computing, including Amazon’s cloud and Google’s search engine, guzzled about 1 to 1.3 percent of the world's electricity. And that doesn’t include cryptocurrency mining, which added another 0.4 percent.

The fuel source of these data centers adds another layer of complexity. If they depend largely on fossil fuels, the electricity essential for AI's operation could become a significant contributor to global carbon emissions.

This presents a critical juncture for stakeholders at all levels. While AI's transformative potential is undeniable, it's imperative to intertwine its evolution with sustainable measures. As we push the boundaries of what's possible with AI, we must also ensure our ambitions don't cast a shadow too large for our planet to bear. Our commitment to a digital future should be matched, if not exceeded, by our dedication to a greener tomorrow.

Guesstimating Energy Use

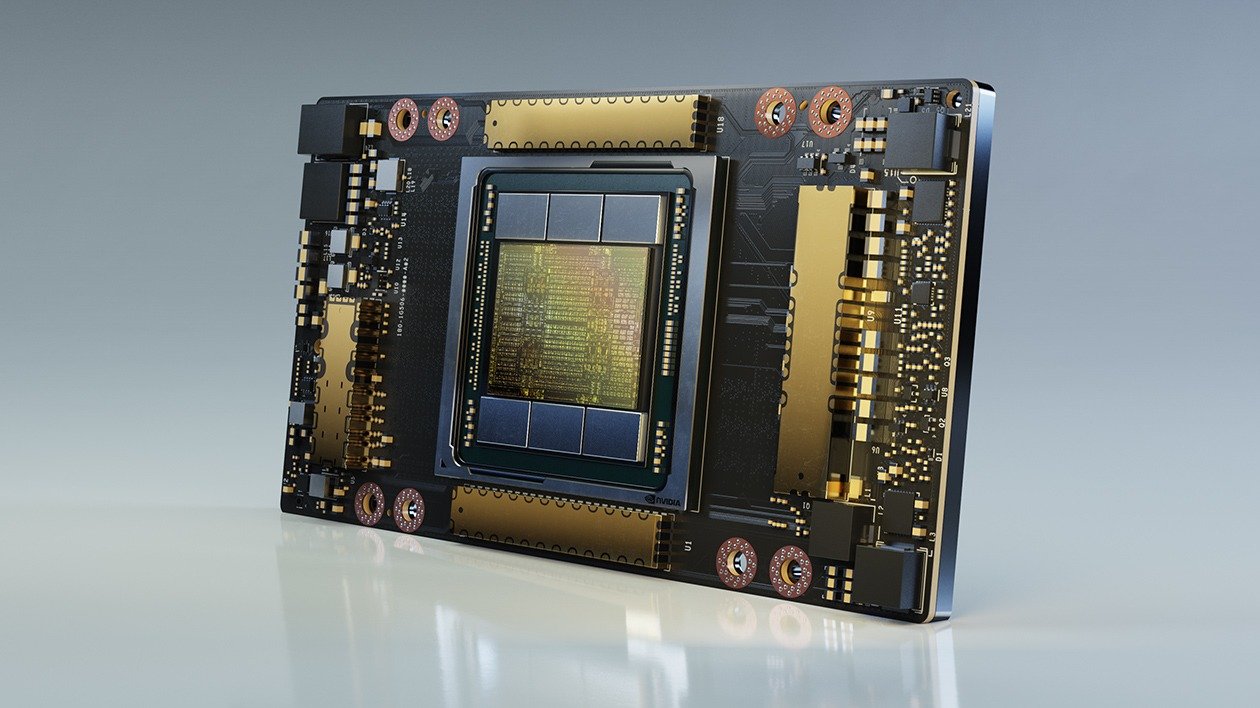

It’s impossible to precisely quantify AI’s energy use; companies like OpenAI reveal very few details, including how many specialized chips they need to run their software. So, electricity consumption estimates have been made using projected sales of Nvidia A100 servers – the hardware used by 95% of the AI market.

A recent projection estimates that Nvidia could ship 1.5 million of these servers by 2027. Multiply that number by each server’s power draw (6.5 kilowatts for Nvidia’s DGX A100 servers, for example, and 10.2 kilowatts for its DGX H100 servers) and by the 8,760 hours in a year, and the result is roughly 85 to 134 terawatt hours annually.

Customers might use the servers at less than 100% capacity, lowering electricity consumption. However, server cooling and other infrastructure would push the total higher.
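For readers who want to check the arithmetic, here is a minimal Python sketch of the estimate. It uses only the figures quoted above (1.5 million servers, 6.5 and 10.2 kilowatts per server) and assumes the servers run around the clock. The function name `annual_twh` and its `utilization` and `overhead` parameters are our own illustrative choices, not part of any published methodology.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_twh(servers, kw_per_server, utilization=1.0, overhead=1.0):
    """Rough annual electricity use in terawatt hours (TWh).

    utilization: assumed fraction of full power actually drawn (1.0 = 100%).
    overhead: assumed multiplier for cooling and other infrastructure
              (1.0 = none counted).
    """
    kwh = servers * kw_per_server * utilization * overhead * HOURS_PER_YEAR
    return kwh / 1e9  # 1 TWh = 1,000,000,000 kWh


SERVERS_2027 = 1_500_000  # projected shipments quoted in the article

low = annual_twh(SERVERS_2027, 6.5)    # DGX A100 power draw
high = annual_twh(SERVERS_2027, 10.2)  # DGX H100 power draw

print(f"{low:.1f} to {high:.1f} TWh per year")
```

Setting `utilization` below 1.0 pulls the total down, while setting `overhead` above 1.0 pushes it back up, which is the tug-of-war described above. Any specific values you plug in for those two knobs would be guesses, since the source gives no figures for either.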

NVIDIA A100 Tensor Core GPU / Source: Nvidia

Nvidia’s Lock on AI

Nvidia has built a decisive lead in AI hardware and will likely maintain it for a few years, but rivals are racing to catch up. The limited supply of Nvidia chips is acting as a bottleneck for AI growth, which means companies of all sizes are clamoring to find their own supply of chips.

In an emailed statement, Nvidia boasted that the company’s specialized chips are better than other options, stating that it would take many more conventional chips to accomplish the same tasks. “Accelerated computing on Nvidia technology is the most energy efficient computing model for AI and other data center workloads,” the company said.

Pump the Brakes?

Some experts are urging companies to factor in electricity consumption when designing the next generation of AI hardware and software. But that’s proving to be a tough sell as companies rush to enhance their AI models. Amid this swift pace of innovation, many advise a moment of pause: harnessing existing solutions rather than incessantly pursuing speed and precision. We must deeply consider the environmental toll and understand the long-term implications. After all, innovation shines brightest when it respects the world it aims to transform. Onward, but with care.